With the rapid boom of artificial intelligence, or AI, in the last few months, universities and educational institutions have scrambled to figure out how to tackle academic dishonesty concerns, responsibly and ethically use and teach about AI, and learn more about the quickly evolving technology.

The reliability of the software, the potential pros and cons of artificial intelligence and the academic integrity concerns it raises have all quickly dominated academic discourse.

Director of the Texas A&M University Writing Center Nancy Vazquez says the quick evolution of ChatGPT has been an important topic of both formal and informal conversation among faculty and staff. Faculty are concerned AI may enable students to engage in dishonest academic behavior.

“One of the concerns is that when you get a university degree, you assume that person is capable of doing certain things, they have certain skills and knowledge, but, potentially, AI clouds that,” Vazquez said.

Even with tools such as Grammarly and Microsoft’s auto-predicting text, Vazquez said she always recommends that Writing Center consultants have students check that their professors are okay with their use.

“Students thinking about using any type of AI for classes or an application need to check with the authorities, whether that is an instructor or a program they’re applying for … What are the policies?” Vazquez said.

Vazquez says there is a range of worries with ChatGPT, including the legal question of who owns the text that AI generates. Additionally, Vazquez said librarians have spent hours looking for sources generated by ChatGPT that don’t actually exist. Early versions of ChatGPT offered premium versions after a limited free trial, which also raises questions of access, as some students might have the use of tools that other students can’t afford, according to Vazquez.

“I also think about AI being useful for generating routine writing that people do … or even [planning] out your writing, or a writing schedule … but also create a renaissance for the personality, human characteristics and creativity that we bring,” Vazquez said.

Currently, the Writing Center references AI under the plagiarism statement in the course policies section of syllabi, with language tailored toward professors who want to mention AI in their class.

“According to the [A&M] definitions of academic misconduct, plagiarism is the appropriation of another person’s ideas, processes, results or words without giving appropriate credit,” Vazquez said. “You should credit your use of anyone else’s words, graphic images, or ideas using standard citation styles. AI text generators such as ChatGPT should not be used for any work for this class without explicit permission of the instructor and appropriate attribution.”

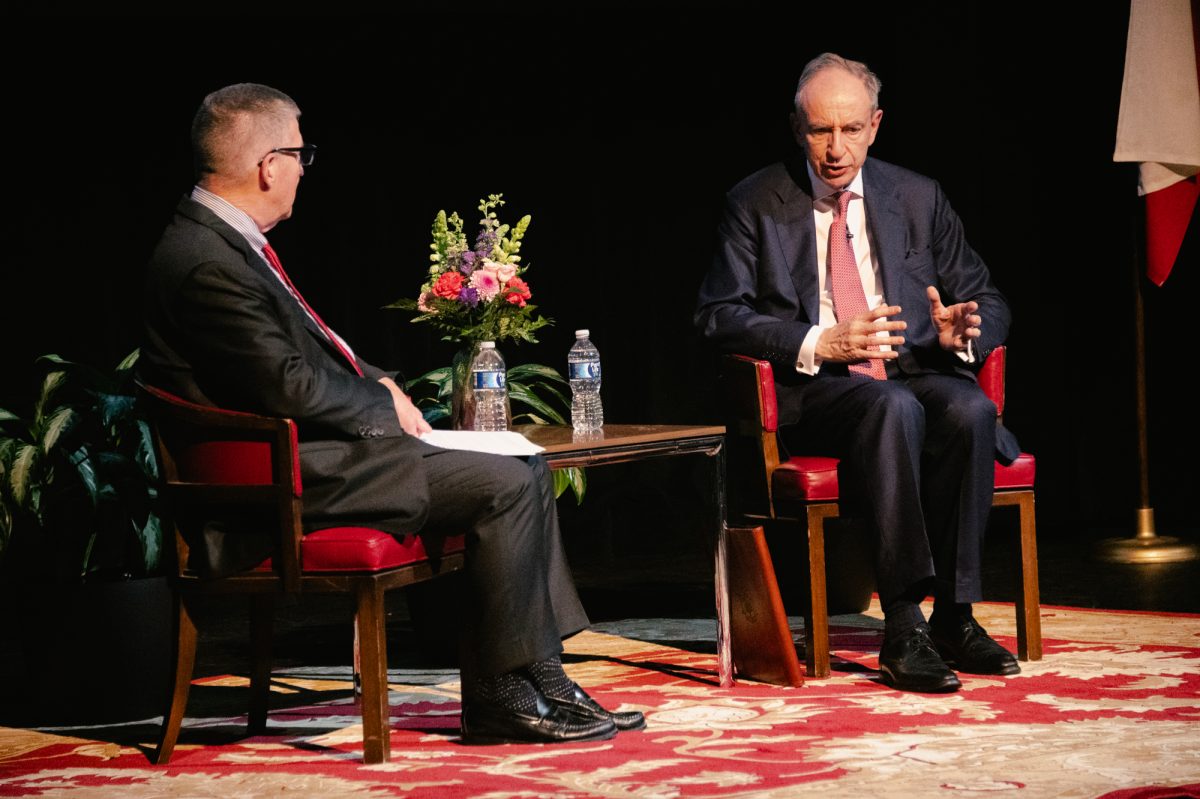

Philosophy professor Kenny Easwaran, Ph.D., said AI mimics the neural network of the brain and is able to deal with language by associating and connecting information. However, it has only a limited memory: GPT-4 can look back a few pages, compared to only a few paragraphs with GPT-2.

“This is an associationist and connectionist neural network-based system, and this is one of the main criticisms … that this is not going to get us real artificial intelligence,” Easwaran said. “It looks really good, but that is just because it can babble convincingly.”

The associationist and connectionist thinking that artificial intelligence is able to do rather quickly is also something that humans do, Easwaran said. However, symbolic reasoning, which is oftentimes slower and used for things like math, is harder to replicate, according to Easwaran.

“One of the things that we try to develop in higher education is to get people to use this slow and effortful symbolic reasoning,” Easwaran said.

Easwaran said because ChatGPT pulls from information that people have given it, it writes based on recognized patterns and memorized information. However, it is not as good at understanding arguments or why premises either support or don’t support a conclusion, Easwaran said.

“It can do certain things like write a recommendation letter, or a memo … but if you do something like original intellectual writing, it can’t do that,” Easwaran said.

Easwaran said he suspects that in the same way people use spreadsheets electronically now and nobody writes by hand anymore, in five to 10 years, AI is going to be a more normalized part of writing papers. Though he said he doesn’t know exactly how it will evolve in the future, it is important for students to understand that, just as Wikipedia can be a good source of information without being perfect, ChatGPT does have errors.