Artificial intelligence, or AI, has taken the world by storm, and Texas A&M is beginning to adapt.

What began as a controversial tool is now being used to teach, learn, research and work at A&M, according to a university website about “harnessing AI.” Professors and students alike have begun integrating AI into their research and coursework.

Andrew Dessler, an atmospheric sciences professor and the director of the Texas Center for Extreme Weather, said that AI is here to stay and that banning it in the classroom would be counterproductive.

“Faculty need to figure out ways to include AI in assignments in ways that still, you know, teach students critical thinking skills and writing skills,” Dessler said. “The skills that we’ve always been trying to teach them but in a way that incorporates AI. Because trying to pretend it doesn’t exist or trying to tell the students not to use it is not going to work.”

Dessler also emphasized the importance of understanding AI in the corporate world, as it is quickly becoming a key tool in most careers. Dessler said he wants to incorporate AI into his students’ learning objectives, treating it like any other skill that may prepare them for the workforce.

“It’s a tool that’s going to be more and more widely used,” Dessler said. “And, you know, as a university, we should be educating students on how to use tools.”

While chatbots are the most popular form of AI and the one most recognizable to a mainstream audience, they aren’t the only form Dessler takes advantage of in his classroom.

“There’s a whole field of AI … I would more generally refer to as machine learning, which is a technique basically for modeling data,” Dessler said.

Dessler believes the world will run on machine learning in the near future, so he encourages all STEM majors to gain exposure to it.

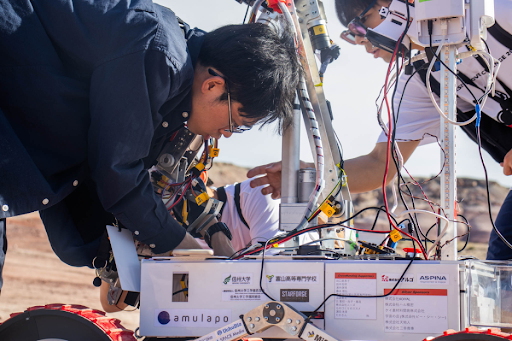

First-year nuclear physics Ph.D. student Anthony Lestone uses AI in his research at the Texas A&M Cyclotron Institute. Initially encouraged to use GitHub Copilot, Lestone convinced his mentor to permit the use of ChatGPT Plus for writing code.

“I think virtually every graduate student that I work with uses AI in some way to write code,” Lestone said.

Lestone gave a well-attended presentation at the Cyclotron Institute on the effectiveness of AI in his research, introducing many professors and students to the benefits of AI for the first time.

While Lestone openly supports the use of AI in research under certain conditions, he believes there are ethical limits.

“In my world, writing code to do data analysis is a good use of AI, but using AI to write your paper for you would be a terrible use,” Lestone said.

Andrew Pilsch, associate department head for the Department of English, supports an ethical approach to AI but also sees potential dangers in its use.

“I worry that students are being encouraged by many AI companies to use these tools in an unthinking manner, as a source of a quick answer or a solution you don’t have to think about,” Pilsch wrote in an email. “I want to design courses that encourage students if we’re going to use AI tools, to understand why we’re using them and to incorporate them into our workflow rather than to use them as a replacement for things we would otherwise have done ourselves.”

Although he recognizes the dangers, Pilsch isn’t against integrating AI in the classroom.

“It is important to me as a rhetoric teacher for students to have a critical understanding of the tools they use to communicate, whether that’s sitting down to write a traditional essay or posting a video to TikTok,” Pilsch wrote. “Understanding how persuasion happens involves understanding which tools are best for the job; AI is no different.”

Pilsch wrote that AI should not be banned entirely but rather limited on a class-by-class basis. When teaching writing courses, Pilsch has asked his students to avoid AI. However, in his “Digital Authoring Class,” he uses an AI programming aid to help guide students as they build a website.

Despite AI’s usefulness as a tool, Pilsch doesn’t think students should use it without permission from the instructor.

“The usage of AI tools without express permission from the instructor and passing off the work of a computer program as your own is still plagiarism, just as it would be if a student paid a homework help service for a human to complete the assignment,” Pilsch wrote. “I think the other thing that’s important is establishing dialogue; I dislike the way AI is often used in classes in a sneaky fashion. If we were having more open communication about how we might use AI tools, I think everyone in the university would be better equipped to work with them in their classes.”